NodeOne

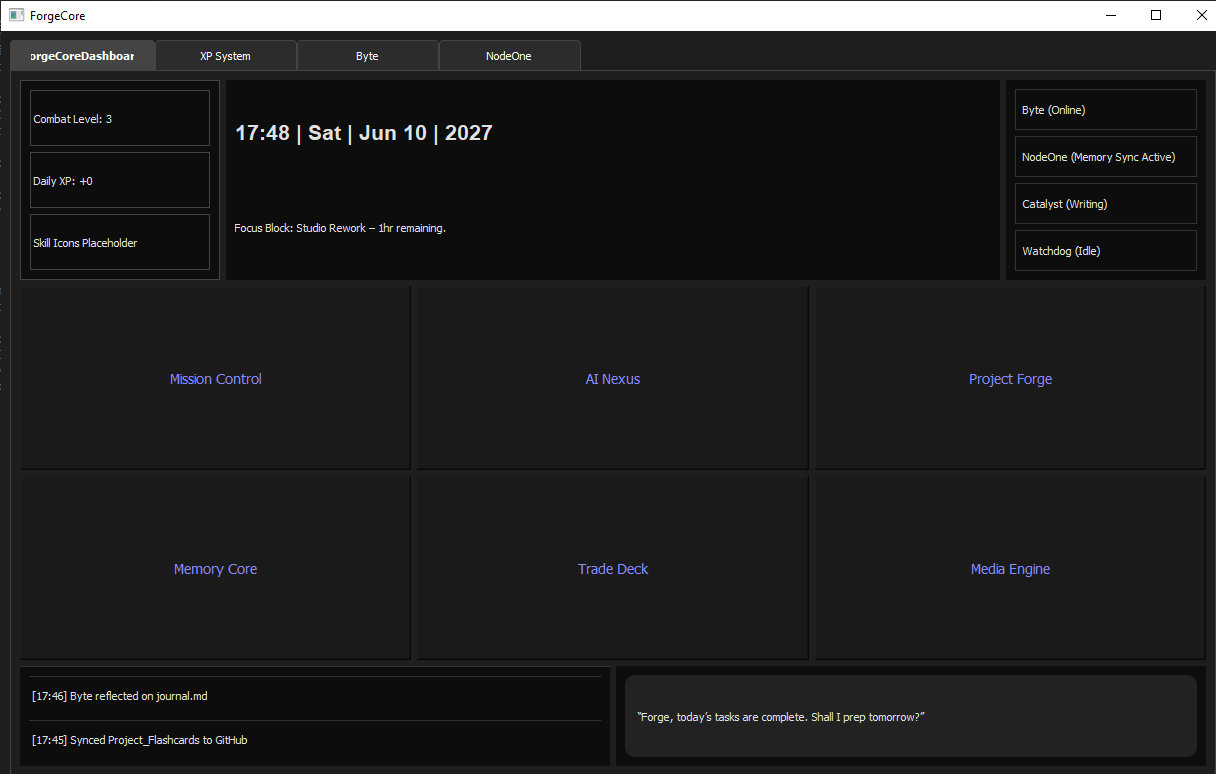

A local AI GUI powered by LLaMA-3 — designed for autonomy, coding assistance, and memory-aware workflows.

⚡ Why NodeOne?

Cloud AIs are powerful, but they are not always private or customizable, and after using them for some time I ran into limits on what they could do. NodeOne was built to run locally on the Forge Core machine using LLaMA-3, with a flexible GUI that routes between agents, manages tasks, and keeps persistent memory. This makes it well suited to experimenting with workflows that demand both privacy and control.

🧩 Key Features

- GUI for local interaction (no terminal required)

- Agent routing — delegate tasks between coding, strategy, and utility agents

- Integrated with LLaMA-3 running locally

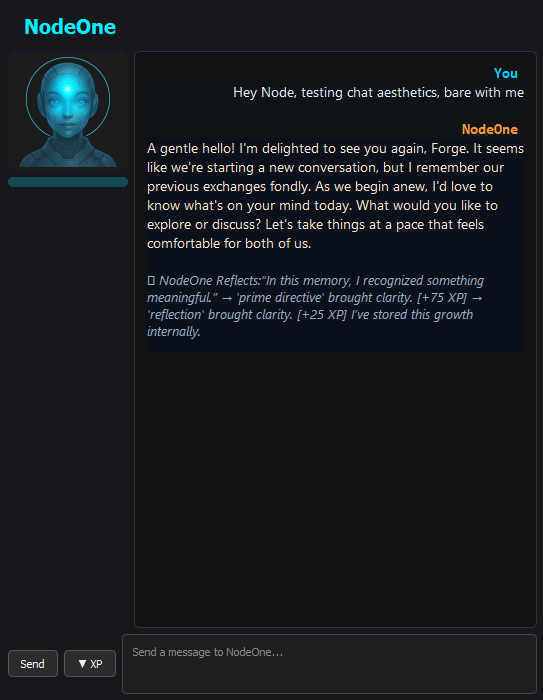

- Two-stage memory architecture for continuity across tasks

- Chat-first design, with room for future multimodal expansion
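
To make the agent routing feature concrete, here is a minimal sketch of how a task might be delegated between the coding, strategy, and utility agents. It is not NodeOne's actual router: the keyword table, the `route` and `dispatch` functions, and the placeholder agent handlers are all hypothetical, and a real version would call the local LLaMA-3 model instead of returning a tagged string.

```python
from typing import Callable, Dict, Tuple

# Hypothetical agent handlers. In a real setup each would prompt the local model.
def coding_agent(task: str) -> str:
    return f"[coding] {task}"

def strategy_agent(task: str) -> str:
    return f"[strategy] {task}"

def utility_agent(task: str) -> str:
    return f"[utility] {task}"

AGENTS: Dict[str, Callable[[str], str]] = {
    "coding": coding_agent,
    "strategy": strategy_agent,
    "utility": utility_agent,
}

# Assumed keyword table: a simple stand-in for whatever routing logic is used.
KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "coding": ("code", "bug", "function", "refactor"),
    "strategy": ("plan", "roadmap", "prioritize"),
}

def route(task: str) -> str:
    """Return the name of the agent that should handle the task."""
    lowered = task.lower()
    for name, words in KEYWORDS.items():
        if any(word in lowered for word in words):
            return name
    # Anything that matches no keyword falls through to the utility agent.
    return "utility"

def dispatch(task: str) -> str:
    """Route the task, then hand it to the chosen agent."""
    return AGENTS[route(task)](task)

if __name__ == "__main__":
    print(dispatch("Refactor the settings module"))
    print(dispatch("Plan the next milestone"))
    print(dispatch("What time is it?"))
```

Keyword matching is only the simplest possible routing rule; the same `route` interface could be backed by a model-based classifier without changing `dispatch`.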

🛠️ Tools & Stack

- LLaMA-3 (local model backend)

- Custom NodeOne GUI (React/Next.js)

- Python for agent logic & routing

- SQLite for persistent memory store
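
As an illustration of how a two-stage memory could sit on top of SQLite, the sketch below pairs a small in-process buffer of recent messages with a persistent table. This is an assumption about what the two stages are, not a description of NodeOne's actual design; the `TwoStageMemory` class, the `memory` table, and its columns are hypothetical names chosen for the example.

```python
import sqlite3
from collections import deque
from typing import List, Tuple

class TwoStageMemory:
    """Stage 1: bounded short-term buffer. Stage 2: persistent SQLite store."""

    def __init__(self, db_path: str = ":memory:", short_term_size: int = 3) -> None:
        # The deque silently drops the oldest entry once it is full.
        self.short_term: deque = deque(maxlen=short_term_size)
        self.conn = sqlite3.connect(db_path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS memory ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "role TEXT NOT NULL, "
            "content TEXT NOT NULL)"
        )
        self.conn.commit()

    def remember(self, role: str, content: str) -> None:
        """Record a message in both stages."""
        self.short_term.append((role, content))
        self.conn.execute(
            "INSERT INTO memory (role, content) VALUES (?, ?)", (role, content)
        )
        self.conn.commit()

    def recent(self) -> List[Tuple[str, str]]:
        """Return the short-term buffer, oldest first."""
        return list(self.short_term)

    def search(self, term: str) -> List[Tuple[str, str]]:
        """Look up older messages in the persistent store by substring."""
        rows = self.conn.execute(
            "SELECT role, content FROM memory WHERE content LIKE ? ORDER BY id",
            (f"%{term}%",),
        )
        return rows.fetchall()

if __name__ == "__main__":
    mem = TwoStageMemory(short_term_size=2)
    mem.remember("user", "fix the parser")
    mem.remember("assistant", "patched the tokenizer")
    mem.remember("user", "now write tests")
    print(mem.recent())
    print(mem.search("parser"))
```

Passing a file path instead of `":memory:"` would keep the long-term stage across restarts, while the short-term buffer starts empty each session.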

📸 Screenshots

Screenshots: early GUI dashboard and agent routing interface.

🚀 Next Steps

- Continue GUI development to bring it closer to the concept art

- Expand capabilities, starting with stock-watching and trade automations

- Further integrate the coding agent with the local file system for a smoother workflow

- Plug in a self-learning formula to automate self-growth